How I Built an AI Engineering Observer That Scans GitHub and Summarizes What Matters - in 10 min

A real-world walkthrough of turning engineering activity into GPT-powered weekly insight reports (without dashboards or manual digging)

Most engineering managers I know don’t want another dashboard.

They want answers. They want clarity. They want to know:

- What’s slowing my team down?

- Is someone overloaded?

- Are we following good engineering hygiene?

And most importantly:

"Can someone just tell me what matters this week—without me digging through GitHub or Jira?"

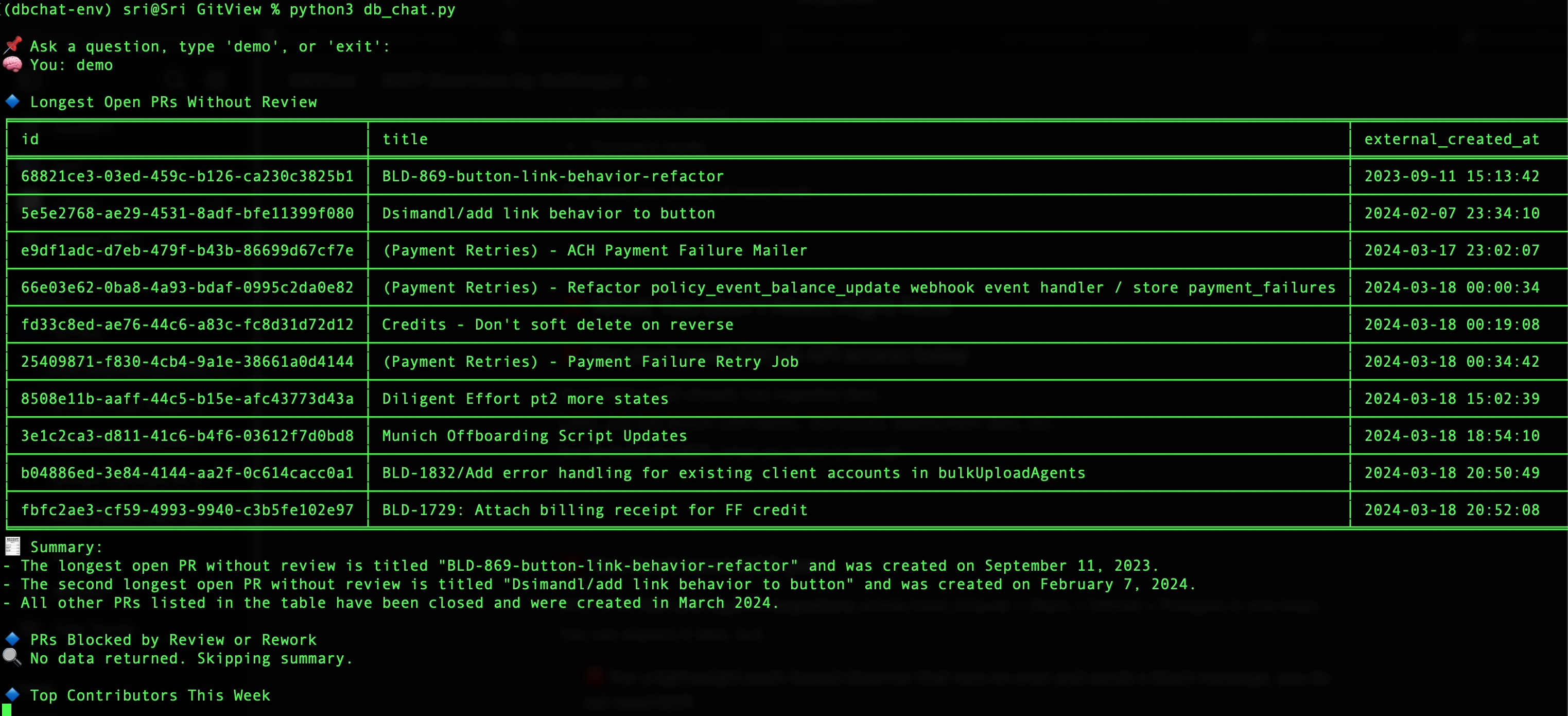

So I built it. A lightweight, AI-powered observer that connects to GitHub, scans real activity, and summarizes what happened, why it matters, and what to do—automatically.

This isn’t a theoretical demo or a GPT playground. This is a production script running against a real company's GitHub data. Here’s how it works.

Step 1: Connect to the Real World (GitHub Data)

In my case, I had access to the GitView production Postgres database, which syncs GitHub activity: pull requests, commits, contributor info, reviews.

Instead of connecting to GitHub APIs directly (which you could), I just queried the database like this:

```sql
SELECT id, title, created_at, state, contributor_id
FROM pull_requests
WHERE created_at >= CURRENT_DATE - INTERVAL '7 days';
```

I also fetched contributor names, commit counts, and merged PRs without reviews.
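Here is a minimal Python sketch of this fetch step. It illustrates the idea and is not the original script. It uses the standard-library `sqlite3` module with an in-memory database as a stand-in for the GitView Postgres instance, so the date math is SQLite's `date('now', '-7 days')` instead of Postgres's `CURRENT_DATE - INTERVAL '7 days'`. The `contributors` and `reviews` tables, their columns, the sample rows, and the 5-day "stuck" threshold are all assumptions. The sketch also makes one detail explicit: a PR that has been open for 12 days would never match a created-in-the-last-7-days filter, so stuck PRs need their own query over every open PR.

```python
import sqlite3

# Hypothetical schema: the real GitView tables are not shown in the post.
SCHEMA = """
CREATE TABLE contributors (id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE pull_requests (
    id INTEGER PRIMARY KEY,
    title TEXT,
    created_at TEXT,          -- ISO-8601 date string
    state TEXT,               -- e.g. 'open', 'closed', 'merged'
    contributor_id INTEGER REFERENCES contributors(id)
);
CREATE TABLE reviews (id INTEGER PRIMARY KEY, pull_request_id INTEGER);
"""

# PRs opened in the last 7 days (SQLite date syntax instead of Postgres INTERVAL).
RECENT_PRS = """
SELECT id, title, created_at, state, contributor_id
FROM pull_requests
WHERE created_at >= date('now', '-7 days')
"""

# Open PRs older than :days with no review at all, regardless of creation week.
STUCK_PRS = """
SELECT pr.title AS title,
       c.name AS author,
       CAST(julianday('now') - julianday(pr.created_at) AS INTEGER) AS days_open
FROM pull_requests pr
JOIN contributors c ON c.id = pr.contributor_id
LEFT JOIN reviews r ON r.pull_request_id = pr.id
WHERE pr.state = 'open'
  AND pr.created_at <= date('now', '-' || :days || ' days')
  AND r.id IS NULL
ORDER BY days_open DESC
"""


def fetch_activity(conn: sqlite3.Connection, stuck_after_days: int = 5) -> dict:
    """Return recent PRs and stuck PRs as lists of plain dicts."""
    conn.row_factory = sqlite3.Row  # rows can then be converted with dict(row)
    recent = [dict(row) for row in conn.execute(RECENT_PRS)]
    stuck = [dict(row) for row in conn.execute(STUCK_PRS, {"days": stuck_after_days})]
    return {"recent_pull_requests": recent, "stuck_pull_requests": stuck}


# Demo with hypothetical sample rows.
conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
conn.executescript("""
INSERT INTO contributors VALUES (1, 'John'), (2, 'Mike');
INSERT INTO pull_requests VALUES
  (10, 'Refactor Claims Logic', date('now', '-12 days'), 'open', 1),
  (11, 'Fix login bug', date('now', '-2 days'), 'open', 2);
""")
activity = fetch_activity(conn)
print(activity["stuck_pull_requests"])
```

Only the 12-day-old PR comes back as stuck, and only the 2-day-old PR counts as recent activity, which is why the two questions get separate queries.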

Step 2: Shape the Data for GPT

GPT can't make much sense of raw DB tables, so I formatted the data into structured JSON, limited to the top 10 entries in each section:

```json
{
  "stuck_pull_requests": [...],
  "commit_activity": [...],
  "contributors_with_no_commits": [...]
}
```
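A minimal sketch of the shaping step, assuming each query result arrives as a list of plain dicts. The field names inside each entry, the extra `merged_without_review` key (for the merged-without-review data mentioned in Step 1), and the sample names are illustrative assumptions; only the three section names and the top-10 cap come from the setup described here.

```python
import json
from collections import Counter

TOP_N = 10  # each section is capped at its top 10 entries


def build_snapshot(stuck_prs, commits, contributors, merged_without_review):
    """Turn raw query rows into the compact JSON that goes into the prompt.

    Assumed input shapes (hypothetical):
      stuck_prs: dicts with "title", "author", "days_open"
      commits: one dict with an "author" key per commit
      contributors: list of every contributor name
      merged_without_review: dicts with "title", "author"
    """
    commit_counts = Counter(c["author"] for c in commits)
    snapshot = {
        # Longest-waiting PRs first, then truncate.
        "stuck_pull_requests": sorted(
            stuck_prs, key=lambda p: p["days_open"], reverse=True
        )[:TOP_N],
        # Busiest contributors first, so outliers are easy for GPT to spot.
        "commit_activity": [
            {"author": name, "commits": n}
            for name, n in commit_counts.most_common(TOP_N)
        ],
        # Everyone on the roster with zero commits this week.
        "contributors_with_no_commits": sorted(
            name for name in contributors if name not in commit_counts
        )[:TOP_N],
        "merged_without_review": merged_without_review[:TOP_N],
    }
    return json.dumps(snapshot, indent=2)


# Demo with hypothetical sample data.
snapshot_json = build_snapshot(
    stuck_prs=[{"title": "Refactor Claims Logic", "author": "John", "days_open": 12}],
    commits=[{"author": "Mike"}] * 56 + [{"author": "John"}] * 4,
    contributors=["John", "Mike", "Priya"],
    merged_without_review=[],
)
print(snapshot_json)
```

Sorting before truncating matters here: "top 10" should mean the ten longest-waiting PRs and the ten busiest contributors, not whichever rows the database happened to return first.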

Step 3: Ask GPT the Right Questions

Here’s the exact prompt I used:

```
You are an engineering insights assistant.

Here is a snapshot of repository activity over the last 7 days:

Group your insights into these categories:
- 🔴 Problems
- 🟡 Watchlist
- ✅ Good News

For each insight:
1. What happened?
2. Why does it matter?
3. What should the team do?

Use contributor names and PR titles. Be concise, actionable, and human.
```

GPT takes this and gives back a structured summary like:

```
🔴 Problems
PR "Refactor Claims Logic" by John has been open for 12 days without review.
→ This might be blocking dependent work. Suggest assigning a reviewer.

🟡 Watchlist
Mike made 56 commits this week. Others <10. Consider balancing work.

✅ Good News
No PRs merged without review. Team is following code quality standards.
```

This feels like a real engineering lead wrote it.
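Wiring the prompt above to the JSON snapshot takes only a few lines. This sketch assumes the JSON is inserted directly after the "snapshot" line and that the call goes through the official `openai` Python package (v1 or later) and its Chat Completions API. The model name is a placeholder, since the post only says "GPT", and sending everything as a single user message is my choice, not necessarily what the original script does.

```python
PROMPT_TEMPLATE = """You are an engineering insights assistant.

Here is a snapshot of repository activity over the last 7 days:

{snapshot}

Group your insights into these categories:
- 🔴 Problems
- 🟡 Watchlist
- ✅ Good News

For each insight:
1. What happened?
2. Why does it matter?
3. What should the team do?

Use contributor names and PR titles. Be concise, actionable, and human."""


def build_messages(snapshot_json: str) -> list[dict]:
    """Chat-style message list: instructions and data go in one user turn."""
    return [{"role": "user", "content": PROMPT_TEMPLATE.format(snapshot=snapshot_json)}]


def summarize(snapshot_json: str, model: str = "gpt-4o") -> str:
    """Send the prompt to GPT and return the text reply.

    The default model name is a placeholder assumption.
    """
    # Imported here so build_messages() works without the openai package installed.
    from openai import OpenAI

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.chat.completions.create(
        model=model,
        messages=build_messages(snapshot_json),
    )
    return response.choices[0].message.content
```

Keeping `build_messages` separate from `summarize` lets you inspect the exact prompt (and its size) without spending an API call.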

Step 4: Output, Deliver, Repeat

I saved the result to a file (`gitview_autoscan_summary.txt`), but it could just as easily be piped into Slack or email.

Why This Isn't Just a Script

This is not about "chatting with your GitHub." It's about having an agent that proactively reports what's going on, without being asked.

Is it agentic AI? Not fully. It doesn't act on its own (yet). But it's a step toward AI that observes, explains, and eventually helps manage your engineering workflow.
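Much of the "without being asked" part is plumbing: run the script on a schedule (cron, a CI job, or similar) and push the output where people already look. Here is a minimal delivery sketch using only the standard library. It writes the summary to `gitview_autoscan_summary.txt` and, if you pass a Slack incoming-webhook URL, posts the same text as a JSON `{"text": ...}` payload. The `deliver` function and its parameters are hypothetical, not part of the original script.

```python
import json
import urllib.request
from pathlib import Path
from typing import Optional


def deliver(summary: str,
            path: str = "gitview_autoscan_summary.txt",
            webhook_url: Optional[str] = None) -> Path:
    """Save the GPT summary to disk and optionally post it to Slack."""
    out = Path(path)
    out.write_text(summary, encoding="utf-8")

    if webhook_url:
        # Slack incoming webhooks accept a JSON body with a "text" field.
        request = urllib.request.Request(
            webhook_url,
            data=json.dumps({"text": summary}).encode("utf-8"),
            headers={"Content-Type": "application/json"},
            method="POST",
        )
        with urllib.request.urlopen(request, timeout=10) as response:
            response.read()  # drain the response; Slack replies with a short "ok"
    return out
```

Run weekly (say, Monday mornings), this is enough to turn a one-off script into a report that shows up on its own.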

What was the outcome?

I shared this with the engineering leaders who own the data (along with a few more sensitive insights about what managers can do).

To my surprise, their feedback was overwhelmingly positive — they said the insights were not only relevant but actionable. Things like:

- Reassigning PRs

- Identifying blocked work

- Spotting early signs of developer burnout

What’s next?

Imagine this:

- Your Slack gets a Monday morning message saying, “3 PRs are stuck. John’s overloaded. One repo had no reviews last week.”

- You click once to assign reviewers or snooze an insight.

- Over time, it learns what you care about and improves.

That’s the future I want to build. This is step one.

Here’s my hypothesis based on what I hear from clients:

“Don’t make me search for insights or dashboards.

Just tell me what’s going on, why it matters, and how to fix it.”